Vulnerability Management in the Vibe Coding Era: AI-Generated App Cybersecurity

AI-coded software brings common exploitation risks into your org. Learn how runtime protection can help.

TL;DR: AI-coded software brings common exploitation risks into your organization at an uncommon speed and scale. Protect your environment by monitoring software behavior at runtime.

Generative AI platforms like ChatGPT, Claude, Cursor, and Copilot are empowering employees, with or without coding backgrounds, to create custom software by simply describing what they need in natural language.

For instance, an employee might type:

“Build a dashboard to track and share our sales performance data by region and product line.”

Many executives view this trend as an opportunity to get things done faster. In some companies, management strongly supports enabling employees to work around problems by building custom dashboards, scripts, and other software that get deployed in an organization’s environment.

Inc. Magazine, which speaks to this executive audience, now calls the trend, known as vibe coding, “the Future of Programming.” Searches for “vibe coding” have surged by 6,700% over the past three months, a clear sign of its growing popularity.

AI-generated software may accelerate innovation, but it also introduces serious security risks. Here’s why that should concern every organization.

(Mostly) The Same Old Risks, Just Amplified

Approximately one in four (26%) security professionals surveyed at this year’s RSA conference said that AI code generation and vibe coding are the most pressing or high-stakes issues that AI creates for their organization.

But what exactly are they concerned about?

At its core, the risk profile of AI-generated applications resembles the familiar software vulnerabilities that IT and security teams already combat, such as hard-coded secrets, weak authentication, missing input validation, and vulnerable dependencies.
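To make one of these familiar flaws concrete, here is a minimal sketch of missing input validation in the kind of sales dashboard described earlier. The table layout and the function names (`make_db`, `sales_unsafe`, `sales_safe`) are hypothetical and chosen only for illustration. The first query builds SQL by string formatting, a pattern that generated code can easily contain. The second uses a bound parameter.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory table with two rows of illustrative sales data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [("EMEA", 100), ("APAC", 250)],
    )
    return conn


def sales_unsafe(conn: sqlite3.Connection, region: str) -> list:
    # Vulnerable: user input is pasted straight into the SQL text.
    query = f"SELECT region, amount FROM sales WHERE region = '{region}'"
    return conn.execute(query).fetchall()


def sales_safe(conn: sqlite3.Connection, region: str) -> list:
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT region, amount FROM sales WHERE region = ?", (region,)
    ).fetchall()


conn = make_db()
payload = "x' OR '1'='1"  # classic injection string
print(len(sales_unsafe(conn, payload)))  # the filter is bypassed
print(len(sales_safe(conn, payload)))    # treated as a literal region name
```

With the injection string, the unsafe version returns every row in the table, while the parameterized version returns none because no region has that literal name.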

A growing body of real-world research backs this up: studies of AI coding assistants have found vulnerable patterns in up to 40% of generated code samples, users with AI help often ship less secure code, and AI-hallucinated packages and scripts have already caused supply-chain exposures and even production data loss. Hallucinations have also created a new attack vector known as “slopsquatting,” in which attackers register the nonexistent package names that AI models tend to invent, so that anyone who installs the suggested dependency pulls in malicious code instead.
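One simple guard against hallucinated dependencies is to check every requested package against a list the organization has already vetted. The sketch below assumes such a list exists. The `VETTED` set, the helper names, and the package `fastjsonx-utils` are all hypothetical, and the name parsing is deliberately simplified rather than a full implementation of Python's requirement syntax.

```python
import re

# Hypothetical allowlist of packages the organization has reviewed.
VETTED = {"requests", "pandas", "flask"}


def package_name(requirement: str) -> str:
    """Strip version specifiers, extras, and markers from a requirements line."""
    name = re.split(r"[<>=!~\[;\s]", requirement.strip(), maxsplit=1)[0]
    # Simplified normalization: lowercase, underscores become hyphens.
    return name.lower().replace("_", "-")


def unvetted(requirements: list[str], vetted: set[str] = VETTED) -> list[str]:
    """Return dependency names that do not appear on the vetted list."""
    names = [
        package_name(line)
        for line in requirements
        if line.strip() and not line.lstrip().startswith("#")
    ]
    return [name for name in names if name not in vetted]


# "fastjsonx-utils" is a made-up name standing in for a hallucinated package.
requested = ["requests==2.31.0", "pandas>=2.0", "fastjsonx-utils"]
print(unvetted(requested))
```

A check like this only helps when the software passes through a pipeline that runs it, which is exactly what most vibe-coded tools skip.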

The problem with AI-generated software is not that these risks exist, but that AI systems create them by default and repeatedly, growing your attack surface if left unchecked.

The primary cybersecurity issue with LLMs is that they generate “typical” vulnerabilities at an unprecedented scale, in apps that are developed and deployed outside the standard development pipeline.

Traditional developers using an AI assistant will still pass their code through established software testing workflows and AppSec tools. But when non‑developers spin up dashboards or software via natural‑language prompts, this software bypasses standard controls, becoming the next wave of shadow IT.

Early survey data shows that the majority of companies are worried about this trend: 60% of organizations lack confidence in detecting unregulated AI deployments.

Unknown AI-Generated Software Creates Cybersecurity Risk

Consider this requirement from a recent Series B startup’s non‑technical job posting:

“Build and ship using tools like Retool, Bubble, Zapier, Vercel, or custom scripts without waiting on Engineering.”

While most SaaS platforms operate outside the organization’s direct control, it’s the custom scripts that end up installed inside your environment, where they can quietly introduce security risks. These tools often access internal data and systems without the typical guardrails that Engineering teams provide, such as oversight, security review, or version control. That’s a recipe for trouble.

The riskiest AI‑generated software shares several characteristics:

- Developed by employees without a formal security review

- Launched outside established IT processes

- Often connected to sensitive data

- Highly likely to be integrated with other business systems or APIs

- Abandoned by their creators or adopted by others without permission or oversight

- Poorly documented, making it hard to audit or maintain

- Lacking proper authentication, access control, or logging

- Prone to overexposing data or functionality to unauthorized users

Essentially, AI-generated apps often behave like shadow IT: they fly under the radar, are hard to detect, and are even harder to secure. As employees generate more software without involving Engineering, the risk compounds.

Almost three in four (73%) cybersecurity leaders already report experiencing security incidents due to unknown or unmanaged assets.

Looking ahead to 2026 and beyond, the real risk that vulnerability management teams face from AI is more of the same, only at greater volume: a surge of software that slips past official inventories while maintaining access to sensitive internal systems.

What Doesn’t Work to Secure Unknown AI-Generated Software

Completely locking down your environment isn’t realistic or even possible.

Security and IT teams are already under business pressure to allow AI-generated software and shadow IT workarounds, so an outright ban would be met with resistance and likely ignored.

The only viable path to reducing the risk from this new generation of shadow IT applications is monitoring for risks as they evolve.

However, most traditional vulnerability scanners can’t keep up.

AI-generated software doesn’t have CVEs or known signatures. It doesn’t show up in asset inventories, rarely gets scanned, and often exists entirely outside the scope of scheduled assessments.

This software won’t show up on an expense report, either. Nor will the majority of it be observable from the outside via a public API. AI-generated software typically runs internally, uses private integrations, or operates on employee devices. As a result, it often remains invisible to both financial oversight and most external monitoring tools.

That’s why vulnerability management teams can’t rely on traditional scanners or even the latest External Attack Surface Management (EASM) tools to catch AI-generated software risks. These tools weren’t built to monitor what’s invisible, improvised, or internally embedded.

What Does Work: Runtime Vulnerability Management of AI-Generated Software

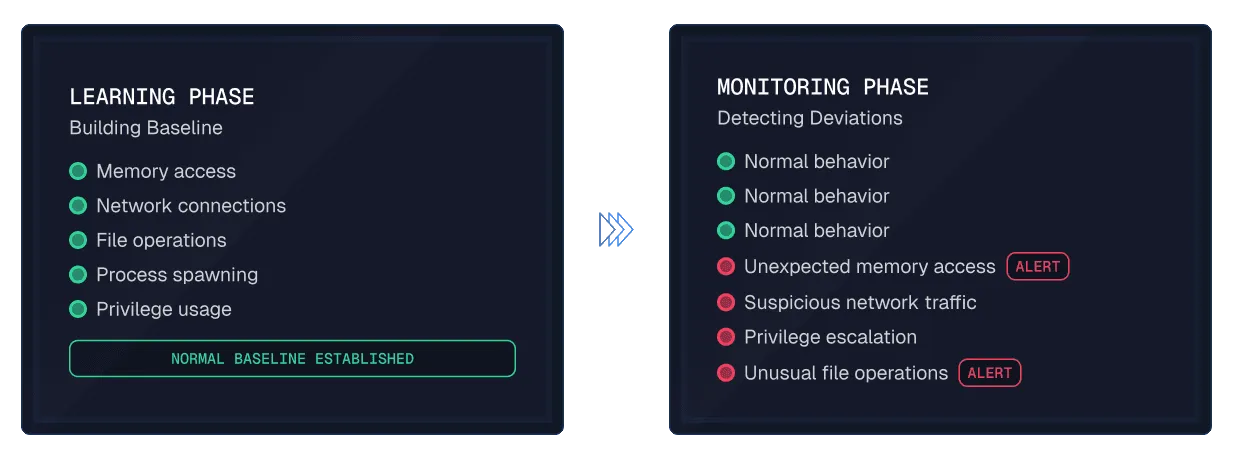

Enterprise security must now account for the IT habits of business users, not just developers, because both groups build tools that interact with customer data, APIs, and internal systems. In this new reality, runtime monitoring is essential because pre-deployment controls alone can’t manage what security teams don’t even know exists.

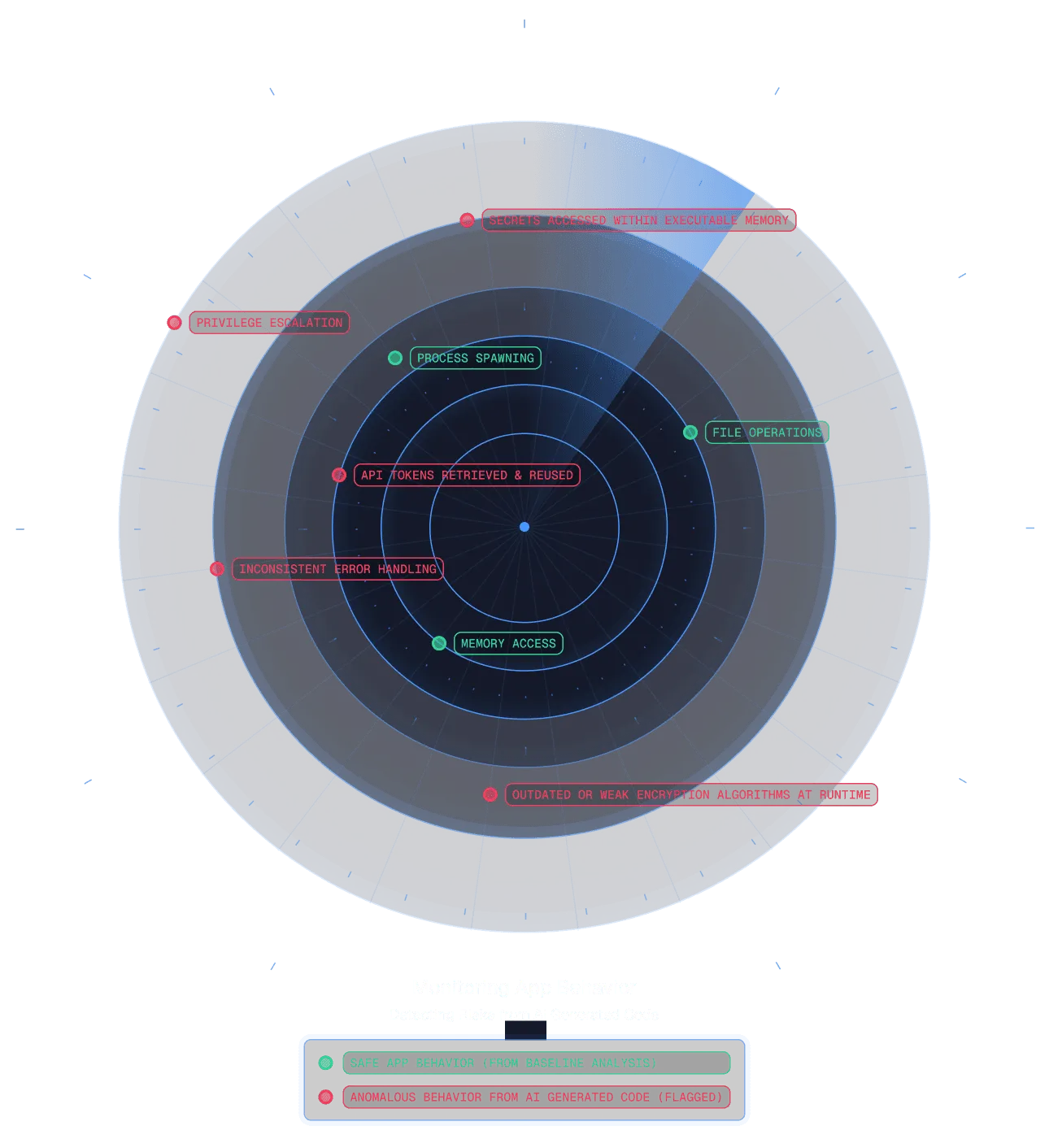

Runtime Vulnerability Management (RVM) identifies insecure behavior in running software, detecting risk based on what an application does, not just what it is.

An RVM solution can flag dangerous behaviors from AI‑built software, such as:

- Retrieving secrets with overly broad permissions

- Accessing sensitive memory regions unexpectedly

- Initiating insecure outbound connections

- Requesting elevated privileges

- Exhibiting known malware patterns
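To illustrate what judging software by what it does can look like, here is a minimal sketch of behavior-based rules applied to observed runtime events. It is not how any particular RVM product works. The `RuntimeEvent` shape, the action names, and the three rules are assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class RuntimeEvent:
    """Hypothetical record of one action observed from a running process."""
    process: str
    action: str                      # e.g. "connect", "read_secret", "setuid"
    detail: dict = field(default_factory=dict)


def findings(event: RuntimeEvent) -> list[str]:
    """Return the names of the illustrative rules that this event trips."""
    hits = []
    detail = event.detail
    # Outbound connection without TLS.
    if event.action == "connect" and not detail.get("tls", False):
        hits.append("insecure-outbound-connection")
    # Secret retrieved with a wildcard scope.
    if event.action == "read_secret" and detail.get("scope") == "*":
        hits.append("overly-broad-secret-access")
    # Process asks to run as root.
    if event.action == "setuid" and detail.get("target_uid") == 0:
        hits.append("privilege-escalation-request")
    return hits


events = [
    RuntimeEvent("sales_dash.py", "connect", {"host": "10.0.0.5", "tls": False}),
    RuntimeEvent("sales_dash.py", "read_secret", {"scope": "*"}),
    RuntimeEvent("report_job.py", "connect", {"host": "api.internal", "tls": True}),
]

for event in events:
    for rule in findings(event):
        print(f"{event.process}: {rule}")
```

Note that nothing in the rules depends on the program's name, vendor, or presence in an inventory. The script is flagged purely because of the actions it takes.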

RVM does all this and more without needing the AI-generated app to be listed in your asset inventory or changing how your teams code, deploy, or run software. That makes Runtime Vulnerability Management ideal for securing AI-generated software and shadow tools that would otherwise go undetected.

Secure AI-Generated Software with Spektion

Spektion can secure AI-generated software, wherever it’s deployed within your environment.

Unlike traditional tools, Spektion detects risky behavior and vulnerabilities in real time, even when software is built and run outside of official IT channels.

This means you get immediate protection for software that would otherwise go unnoticed and unmonitored.

Book a demo to see for yourself how we stop shadow IT and AI-generated software risks.